Introduction: Neural networks are a class of machine learning models loosely inspired by the way neurons in the human brain are connected. They are the core of deep learning and an important part of machine learning more broadly. They are well suited to complex problems, such as recognizing images or speech, that are difficult to solve with traditional, hand-written rules. This article provides an overview of neural networks: what they are, the main types, their applications, how they work, and their strengths and limitations.

- What are Neural Networks? Neural networks are a set of algorithms that learn to recognize patterns and relationships in data. During training, they learn to identify features and classify data by adjusting the weights and biases of the neurons in the network. Their design is inspired by the structure of the human brain: they consist of layers of interconnected nodes that process information and pass it on to one another.

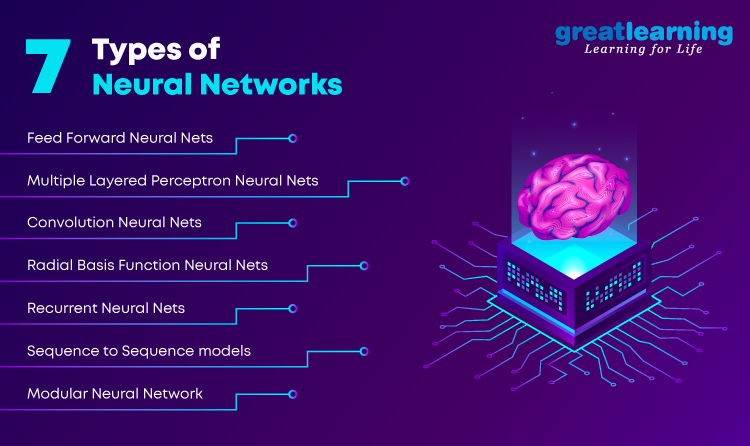

- Types of Neural Networks: There are several types of neural networks, including:

- Feedforward Neural Networks: These networks process data in a single direction, from input to output, with no feedback loop.

- Recurrent Neural Networks: These networks have feedback loops that allow them to process sequential data, such as speech or time series data.

- Convolutional Neural Networks: These networks are designed for image and video processing, identifying and extracting features from visual data.

- Generative Adversarial Networks: These networks generate new data that resembles the training data. Two competing networks are trained together: a generator that produces synthetic samples, and a discriminator that learns to tell those samples apart from real data.

- Autoencoders: These networks are used for unsupervised learning and data compression. They encode the input data into a lower-dimensional representation and then decode it to reconstruct an approximation of the original input.
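To make the "feedback loop" of a recurrent network concrete, here is a minimal sketch of a single recurrent unit in plain Python. It assumes a tanh activation and a scalar hidden state. The function name `rnn_forward` and the weight values are hypothetical and chosen for illustration; they are not learned parameters.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Run a single-unit recurrent neuron over a sequence.

    At each time step the new hidden state depends on the current input
    AND the previous hidden state, which is the feedback loop:
        h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = 0.0  # initial hidden state before the sequence starts
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Hypothetical weights for illustration only (not trained values).
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(s, 3) for s in states])
```

Even though the second and third inputs are zero, the hidden states stay positive because each step feeds the previous state back in. A feedforward neuron with the same weights but no `w_h` term would output exactly 0 for those inputs, since it has no memory of earlier steps.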

- Applications of Neural Networks: Neural networks have a wide range of applications, including:

- Image and Speech Recognition: Neural networks are used in image and speech recognition systems, such as facial recognition, object detection, and speech-to-text.

- Natural Language Processing: Neural networks are used in language processing tasks, such as sentiment analysis, language translation, and chatbots.

- Financial Forecasting: Neural networks can be used for time series analysis and forecasting, such as predicting stock prices or customer demand.

- Robotics: Neural networks can be used in robotics for object detection, path planning, and motion control.

- How Neural Networks Work: Neural networks consist of layers of interconnected nodes, or neurons, that receive input signals, process them, and transmit output signals to the next layer. Each neuron multiplies its inputs by weights, adds a bias term, and passes the result through an activation function that determines the neuron's output. The output of one layer is fed as input to the next layer, and this process continues until the final output is produced. During training, the error between the predicted and actual output is measured, and backpropagation computes how much each weight and bias contributed to that error. An optimization method such as gradient descent then uses this information to update the network parameters.
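The forward pass described above (weighted sum, plus bias, through an activation, layer by layer) can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the helper `dense_layer`, the tiny 2-2-1 network shape, and all weight values are hypothetical choices made for this example.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function: avoids overflow in math.exp.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    return max(0.0, z)

def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j][i] is the weight from input i to neuron j. Each neuron j
    outputs activation(sum_i weights[j][i] * inputs[i] + biases[j]).
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b  # weighted sum plus bias
        outputs.append(activation(z))
    return outputs

# Tiny 2-input -> 2-hidden -> 1-output network with hypothetical weights.
x = [1.0, 2.0]
hidden = dense_layer(x, weights=[[0.5, -0.25], [1.0, 1.0]], biases=[0.0, -1.0], activation=relu)
output = dense_layer(hidden, weights=[[1.0, -0.5]], biases=[0.0], activation=sigmoid)
print(hidden)  # [0.0, 2.0]
print(output)  # about [0.269]
```

The first hidden neuron's weighted sum is exactly 0, so ReLU outputs 0; the second hidden neuron receives 2.0. The output neuron therefore sees -1.0 and returns sigmoid(-1.0), roughly 0.269.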

- Advantages of Neural Networks:

- Neural networks can handle complex, non-linear relationships in data that are difficult for traditional programming methods.

- They learn from data and can improve their accuracy as they are trained on more examples.

- When trained well, neural networks generalize to new, unseen data, which makes them flexible across many tasks.

- Once trained, they can process large amounts of data quickly, especially on parallel hardware such as GPUs.

- Limitations of Neural Networks:

- Neural networks require large amounts of training data to achieve high accuracy.

- They can be prone to overfitting, where the network performs well on the training data but poorly on new data.

- Neural networks are often considered “black boxes,” because it can be difficult to interpret the inner workings of the network and understand how it arrived at a particular output.

Neural networks are a powerful tool for solving complex problems in a variety of domains, from image and speech recognition to financial forecasting and robotics. They are inspired by the structure and function of the human brain and can learn from data, improving their accuracy as they are trained.

Activation Functions

In neural networks, activation functions introduce nonlinearity into the model, allowing it to capture complex relationships between inputs and outputs. Without them, a stack of layers would reduce to a single linear transformation, no matter how many layers it had. Common activation functions include:

- Sigmoid: This function maps any input value to a value between 0 and 1, and is commonly used in the output layer of binary classification models.

- ReLU (Rectified Linear Unit): This function returns the input value if it is positive, and 0 otherwise. It is commonly used in hidden layers: it is cheap to compute and does not saturate for positive inputs, which often makes training faster than with sigmoid or tanh.

- Tanh (Hyperbolic Tangent): This function maps any input value to a value between -1 and 1. Because its output is centered on zero, it is commonly used in hidden layers, especially in recurrent neural networks.

- Softmax: This function is used in the output layer of multi-class classification models, and maps the input values to a probability distribution over the possible classes.
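The four activation functions listed above can be written directly in plain Python as a quick reference. This is a minimal sketch using only the standard `math` module. The max-subtraction inside `softmax` and the two-branch `sigmoid` are standard numerical-stability choices, not anything specific to this article.

```python
import math

def sigmoid(z):
    """Map any real number into the range 0 to 1 without overflowing."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Return z for positive inputs and 0 otherwise."""
    return max(0.0, z)

def tanh(z):
    """Map any real number into the range -1 to 1; zero-centered."""
    return math.tanh(z)

def softmax(logits):
    """Turn a list of raw scores into probabilities that sum to 1.

    Subtracting the maximum score first avoids overflow in math.exp and
    does not change the result, because softmax is unchanged when the
    same constant is added to every score.
    """
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))           # 0.5
print(relu(-3.0), relu(2.5))  # 0.0 2.5
print(tanh(0.0))              # 0.0
print(softmax([2.0, 1.0, 0.1]))
```

In the softmax call, the largest score (2.0) receives the largest probability, and all three probabilities sum to 1.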

Training Neural Networks

Training neural networks involves adjusting the weights and biases of the model so that it can accurately predict the output for a given input. This is typically done through an iterative process, where the model’s predictions are compared to the actual output, and the weights and biases are adjusted accordingly.

The most common method for training neural networks is backpropagation combined with gradient descent. The error between the model’s output and the actual output is measured with a loss function. This error is then propagated backwards through the network to compute how much each weight and bias contributed to it, and the parameters are adjusted to reduce it. The process is repeated over many full passes through the training data, called epochs, until the model’s predictions are sufficiently accurate.
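The training loop described above can be sketched for the smallest possible case: a single sigmoid neuron trained with full-batch gradient descent on binary cross-entropy loss. With only one neuron, the backward pass reduces to a single application of the chain rule. In a multi-layer network, the same error signal would be passed further back through each layer's weights. The function `train_neuron`, the `OR_DATA` toy dataset, the learning rate, and the epoch count are all hypothetical choices for illustration.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_neuron(data, lr=0.5, epochs=5000):
    """Train one sigmoid neuron with gradient descent on binary cross-entropy.

    With p = sigmoid(w . x + b) and L = -(y log p + (1 - y) log(1 - p)),
    the chain rule gives dL/dz = p - y, so dL/dw_i = (p - y) * x_i and
    dL/db = p - y.
    """
    w = [0.0, 0.0]
    b = 0.0
    history = []  # average loss recorded once per epoch
    for _ in range(epochs):  # one epoch = one full pass over the data
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        loss = 0.0
        for x, y in data:
            # Forward pass
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            p_safe = min(max(p, 1e-12), 1.0 - 1e-12)  # keep log() defined
            loss += -(y * math.log(p_safe) + (1 - y) * math.log(1.0 - p_safe))
            # Backward pass: error signal at the neuron's weighted sum
            dz = p - y
            grad_w[0] += dz * x[0]
            grad_w[1] += dz * x[1]
            grad_b += dz
        n = len(data)
        # Gradient descent step using the average gradient over the epoch
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
        history.append(loss / n)
    return w, b, history

# Hypothetical toy dataset: the logical OR function.
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b, history = train_neuron(OR_DATA)
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
```

The loss starts at about 0.693 (log 2), because every prediction is 0.5 when all weights are zero, and it falls as training proceeds. OR is linearly separable, so a single neuron can fit it. A problem like XOR would need at least one hidden layer, and backpropagation would then carry the error through that layer as well.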

Conclusion

Neural networks are a powerful tool for modeling complex relationships between inputs and outputs. With their ability to learn from data and make predictions, they have a wide range of applications in various fields. As more data becomes available and computing power increases, neural networks are likely to become even more prevalent in the years to come.